Vision Language Model-based Caption Evaluation Method Leveraging Visual Context Extraction

Summary

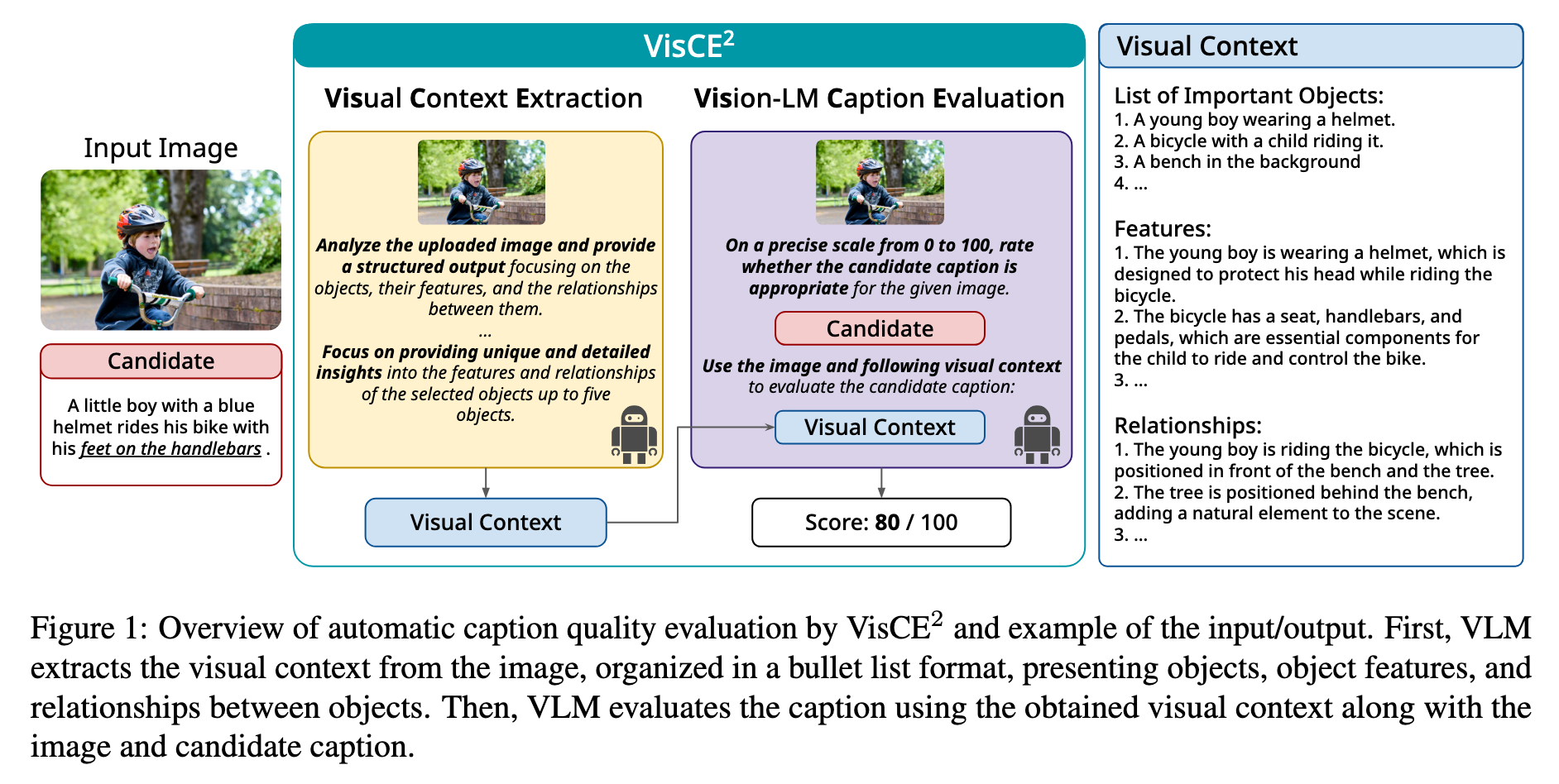

- In this paper, the authors have provided a simple but effective way to prompt VLM to do reference free image caption evaluation

- First, given the image, the VLM has to generate visual context, these visual contexts refer to objects, their attributes and their relationship with each other

- Second, given the image, the candidate caption and the visual context, the LLM has to score from 0 to 100 if the caption is right or not

- The authors have shown that using GPT4 as a VLM model can beat the SOTA score

Annotations

Files/VisionLanguageModelbased_2024/image-3-x62-y538.png

Files/VisionLanguageModelbased_2024/image-3-x62-y538.png

« We define visual context as the content of an image classified into objects, object attributes (including color, shape, and size), and relationships between objects following the similar notion of scene graphs »(3)

« We eliminated these canonical phrases in the postprocessing phase and designated the first integer value in the output sentences as the evaluation score »(3)

« In contrast, the other metrics, including our VisCE2, do not depend on such data-specific fine-tuning. »(4)

« Pearson correlation for measuring the linear correlation between two sets of data, Kendall’s Tau for measuring the ordinal association between two measured quantities and the accuracy as of the percentage of the correct pairwise ranking between two candidate captions »(4)

Date : 02-28-2024

Authors : Koki Maeda, Shuhei Kurita, Taiki Miyanishi, Naoaki Okazaki

Paper Link : http://arxiv.org/abs/2402.17969

Zotero Link: Preprint PDF

Tags : #/unread

Citation : @article{Maeda_Kurita_Miyanishi_Okazaki_2024, title={Vision Language Model-based Caption Evaluation Method Leveraging Visual Context Extraction}, url={http://arxiv.org/abs/2402.17969}, DOI={10.48550/arXiv.2402.17969}, abstractNote={Given the accelerating progress of vision and language modeling, accurate evaluation of machine-generated image captions remains critical. In order to evaluate captions more closely to human preferences, metrics need to discriminate between captions of varying quality and content. However, conventional metrics fail short of comparing beyond superficial matches of words or embedding similarities; thus, they still need improvement. This paper presents VisCE