Is a Question Decomposition Unit All We Need?

Summary

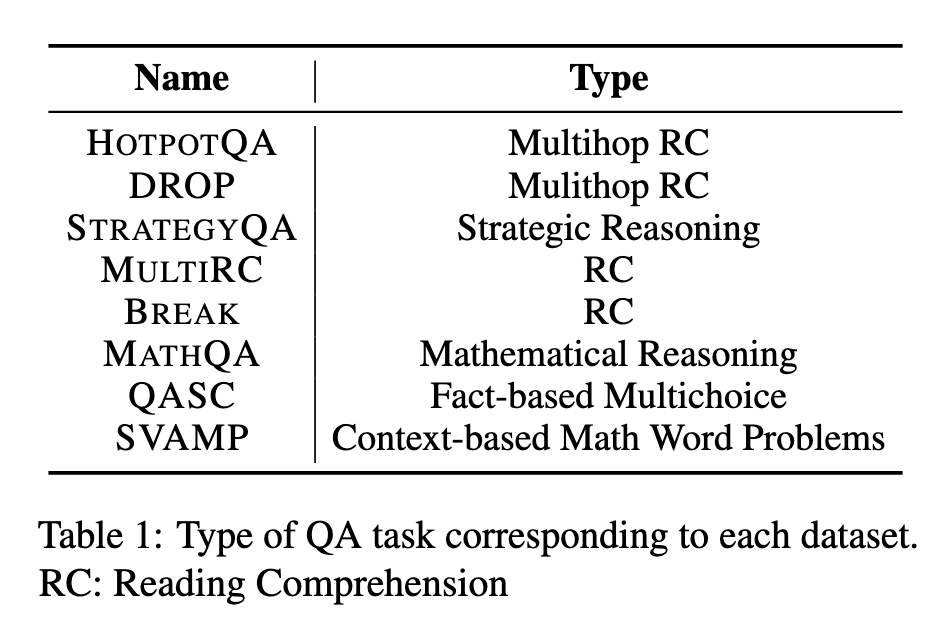

In this paper, the authors show the effect of question decomposition on 8 datasets. The decomposed questions are human-annotated. They use 50 examples from each dataset, for 400 data points in total.

Contributions:

- The main contribution is the dataset.

- The dataset is small: 50 examples from each of 8 datasets = 400 rows.

- However, the dataset is human-annotated, so it serves as a gold dataset for decomposed questions.

Limitations / Future Work

- Although this work provides a good decomposed dataset, creating human-annotated decompositions for every dataset is too expensive in practice. The paper does not cover automatic generation of decomposed questions.

Annotations

« In a decomposition chain, if the answer in one step goes wrong, it propagates till the end and the final prediction becomes wrong » (4)
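
To make the error-propagation point concrete for myself, here is a minimal sketch of a decomposition chain. It is not the authors' code: the `#k` placeholder convention, the `run_chain` helper, and the toy lookup "models" are all assumptions made up for illustration. Each sub-question may refer to the answer of an earlier step, so one wrong intermediate answer changes every later question that depends on it.

```python
import re
from typing import Callable, List

# Hypothetical toy "knowledge base" standing in for a real QA model.
TOY_FACTS = {
    "Who directed Inception?": "Christopher Nolan",
    "In which city was Christopher Nolan born?": "London",
    "In which city was Steven Spielberg born?": "Cincinnati",
}


def run_chain(sub_questions: List[str], answer_fn: Callable[[str], str]) -> List[str]:
    """Answer sub-questions in order, filling '#k' with the answer of step k (1-indexed)."""
    answers: List[str] = []
    for question in sub_questions:
        # Substitute every '#k' placeholder with the previously computed answer.
        filled = re.sub(r"#(\d+)", lambda m: answers[int(m.group(1)) - 1], question)
        answers.append(answer_fn(filled))
    return answers


def correct_model(question: str) -> str:
    # Looks the question up in the toy table.
    return TOY_FACTS.get(question, "unknown")


def faulty_model(question: str) -> str:
    # Simulates a single mistake at the first step of the chain.
    if question == "Who directed Inception?":
        return "Steven Spielberg"
    return TOY_FACTS.get(question, "unknown")


chain = ["Who directed Inception?", "In which city was #1 born?"]
good_answers = run_chain(chain, correct_model)
bad_answers = run_chain(chain, faulty_model)
print(good_answers)
print(bad_answers)
```

In this toy setup the second step is answered "correctly" for the question it receives in both runs, yet the final answer of the faulty run is wrong because it was asked about the wrong person. That is the failure mode the quote describes.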

Date : 12-01-2022

Authors : Pruthvi Patel, Swaroop Mishra, Mihir Parmar, Chitta Baral

Paper Link : https://aclanthology.org/2022.emnlp-main.302/

Zotero Link: Full Text PDF

Tags : ##p1, ##now

Citation : @inproceedings{Patel_Mishra_Parmar_Baral_2022, address={Abu Dhabi, United Arab Emirates}, title={Is a Question Decomposition Unit All We Need?}, url={https://aclanthology.org/2022.emnlp-main.302/}, DOI={10.18653/v1/2022.emnlp-main.302}, abstractNote={Large Language Models (LMs) have achieved state-of-the-art performance on many Natural Language Processing (NLP) benchmarks. With the growing number of new benchmarks, we build bigger and more complex LMs. However, building new LMs may not be an ideal option owing to the cost, time and environmental impact associated with it. We explore an alternative route: can we modify data by expressing it in terms of the model`s strengths, so that a question becomes easier for models to answer? We investigate if humans can decompose a hard question into a set of simpler questions that are relatively easier for models to solve. We analyze a range of datasets involving various forms of reasoning and find that it is indeed possible to significantly improve model performance (24% for GPT3 and 29% for RoBERTa-SQuAD along with a symbolic calculator) via decomposition. Our approach provides a viable option to involve people in NLP research in a meaningful way. Our findings indicate that Human-in-the-loop Question Decomposition (HQD) can potentially provide an alternate path to building large LMs.}, booktitle={Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing}, publisher={Association for Computational Linguistics}, author={Patel, Pruthvi and Mishra, Swaroop and Parmar, Mihir and Baral, Chitta}, editor={Goldberg, Yoav and Kozareva, Zornitsa and Zhang, Yue}, year={2022}, month=dec, pages={4553–4569} }